Modern intelligent systems increasingly incorporate facial analytics, such as age, gender, ethnicity, and emotion prediction, from images and videos. This growing interest is driven by numerous research papers presenting sophisticated techniques, many of them built on deep convolutional neural networks (CNNs).

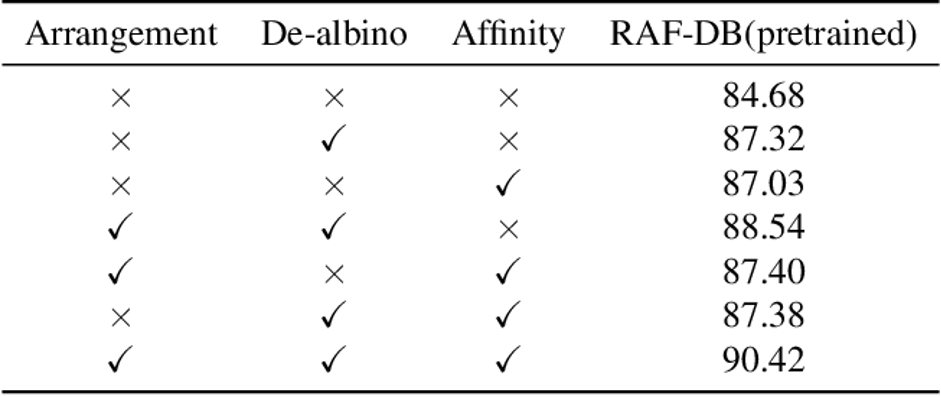

Some of the best results to date come from the pyramid with super-resolution (PSR), which relies on the large VGG-16 network; from deep attentive center loss (DACL); and from the ARM method, which reaches top-tier accuracy by amending facial representations extracted by ResNet-18 via de-albino and affinity.

In our approach to smile detection, we use the confidence score of the "happy" class as the smile score. This isn't a conventional method, but it's rooted in a straightforward principle: the intensity of a smile often parallels the degree of happiness. Imagine a person with a wide grin, radiating joy: a classifier would likely label that face as happy with high confidence. Conversely, someone with a faint smile might register a lower score. By leveraging these confidence scores, we obtain a narrow but precise estimate of smile intensity, ranging from slight grins to full laughs, which gives more detailed insight into the detected emotion.
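The idea of reading the smile score off the "happy" class can be sketched in a few lines. This is a minimal illustration, not our production code: it assumes the classifier returns one raw logit per emotion, and the label order in `EMOTIONS` and the helper name `smile_score` are hypothetical.

```python
import math
from typing import Sequence

# Hypothetical label order; the real order depends on how the classifier was trained.
EMOTIONS = ("neutral", "happy", "sad", "surprise",
            "fear", "disgust", "anger", "contempt")


def softmax(logits: Sequence[float]) -> list[float]:
    """Convert raw logits into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def smile_score(logits: Sequence[float],
                labels: Sequence[str] = EMOTIONS) -> float:
    """Return the probability of the "happy" class as the smile score."""
    if len(logits) != len(labels):
        raise ValueError("expected one logit per emotion label")
    probs = softmax(logits)
    return probs[list(labels).index("happy")]


if __name__ == "__main__":
    wide_grin = [0.1, 6.0, 0.0, 0.5, 0.0, 0.0, 0.0, 0.0]
    faint_smile = [1.5, 2.0, 0.5, 0.5, 0.0, 0.0, 0.0, 0.0]
    print(f"wide grin:   {smile_score(wide_grin):.3f}")
    print(f"faint smile: {smile_score(faint_smile):.3f}")
```

Because the score is a probability, it always falls between 0 and 1, so a threshold (or several) can be chosen later to separate slight grins from full laughs.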

Challenges

Achieving high queries per second (QPS) and ensuring rapid response times are crucial for real-time applications. However, both are often limited by the complexity of neural networks, which makes deployment on edge devices challenging. Furthermore, the quest for lightweight models, which are essential for edge deployments, sometimes compromises accuracy, making the right balance elusive.
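To make the throughput and latency trade-off concrete, here is a rough way to measure both for any prediction function. It is a sketch under simple assumptions (single-threaded, sequential requests, no batching), and `benchmark` and `predict` are hypothetical names rather than part of our API.

```python
import time
from typing import Any, Callable, Sequence


def benchmark(predict: Callable[[Any], Any],
              inputs: Sequence[Any],
              warmup: int = 3) -> dict[str, float]:
    """Measure sequential QPS plus mean and 95th-percentile latency."""
    if not inputs:
        raise ValueError("inputs must not be empty")

    # Warm-up calls are excluded from timing (caches, lazy initialization).
    for sample in inputs[:warmup]:
        predict(sample)

    latencies = []
    start = time.perf_counter()
    for sample in inputs:
        t0 = time.perf_counter()
        predict(sample)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start

    latencies.sort()
    p95_index = min(len(latencies) - 1, int(0.95 * len(latencies)))
    return {
        "qps": len(inputs) / elapsed,
        "mean_latency_s": sum(latencies) / len(latencies),
        "p95_latency_s": latencies[p95_index],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with a real model call.
    stats = benchmark(lambda x: sum(range(10_000)), list(range(200)))
    for name, value in stats.items():
        print(f"{name}: {value:.6f}")
```

Running the same harness on a large and a lightweight model, on the target edge device, gives a like-for-like view of how much speed each accuracy point costs.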

Our model is 8 MB in size and achieves a success rate of over 95.71% on the happy image classification task using the AffectNet-HQ dataset. For more information, check out our online API.

Conclusion

Research on emotion detection has made progress with innovative neural network models, pointing to a future where our devices understand both our commands and our emotions. With continued research and refinement, we'll soon be interacting seamlessly with devices that truly "see" our emotions. And that thought alone is enough to put a smile on anyone's face.

References

Savchenko, A. V. (2021). Facial expression and attribute recognition. SISY, IEEE.

Farzaneh, A. H., Qi, X. (2021). Facial expression recognition in the wild via deep attentive center loss. IEEE.

Shi, J., Zhu, S. (2021). Learning to amend facial expression representation via de-albino and affinity.

Wen, Z., Lin, W., Wang, T., Xu, G. (2021). Distract your attention: Multi-head cross-attention network for facial expression recognition.

Savchenko, A. (2023). Facial Expression Recognition with Adaptive Frame Rate. ICML, PMLR.